UR5e with a gripper: a 1D control problem
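The caption's point is that a parallel gripper reduces teleoperation to a single scalar. The post does not say how that scalar is derived. The sketch below assumes it comes from the thumb-to-index pinch distance reported by hand tracking. The calibration endpoints are hypothetical placeholders.

```python
import math

# Hypothetical calibration (metres): pinch distances treated as fully
# closed and fully open. These are placeholders, tune them per operator.
PINCH_CLOSED = 0.01
PINCH_OPEN = 0.08


def gripper_command(thumb_tip, index_tip, closed=PINCH_CLOSED, open_=PINCH_OPEN):
    """Map the thumb-index distance to a scalar in [0, 1] (0 = open, 1 = closed)."""
    d = math.dist(thumb_tip, index_tip)
    # Linear interpolation between the calibrated endpoints, clamped to [0, 1],
    # then inverted so that a tighter pinch gives a more closed gripper.
    t = (d - closed) / (open_ - closed)
    return 1.0 - min(max(t, 0.0), 1.0)
```

Because the whole hand collapses to one number, the only real design choice is the mapping curve. A linear, clamped ramp is the simplest option.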

Allegro Hand working

Coarse Shadow Hand movements

One of the worst results during iteration came from IK tasks that produced inverted motion: extending the teleoperator's fingers bent the Shadow Hand's fingers, and vice versa.

This happens when you load the Shadow Hands directly from MuJoCo Menagerie. You can fix it in code (which is what we did first) or edit the XML.
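Fixing it in code amounts to re-expressing the tracked keypoints in the frame the model expects before they become IK targets. The sketch below is a minimal example built on two hypothetical assumptions:

* The fingers point along +y in both frames.
* Only the palm normals disagree in sign.

Under those assumptions, a 180° rotation about y flips the palm-normal (z) and lateral (x) axes. The correct rotation depends on your tracker and model.

```python
# Hypothetical correction: 180-degree rotation about y (flips x and z).
# The right matrix depends on the tracker's and the model's frame conventions.
R_FIX = (
    (-1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (0.0, 0.0, -1.0),
)


def matvec(R, v):
    """Multiply a 3x3 matrix (given as rows) by a 3-vector."""
    return tuple(sum(R[i][j] * v[j] for j in range(3)) for i in range(3))


def to_robot_frame(points, wrist, R=R_FIX):
    """Express tracked keypoints relative to the wrist, then rotate them."""
    return [matvec(R, tuple(p[k] - wrist[k] for k in range(3))) for p in points]
```

Every IK target has to pass through a transform like this. Editing the XML so that the model frame already matches the tracker removes that layer entirely.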

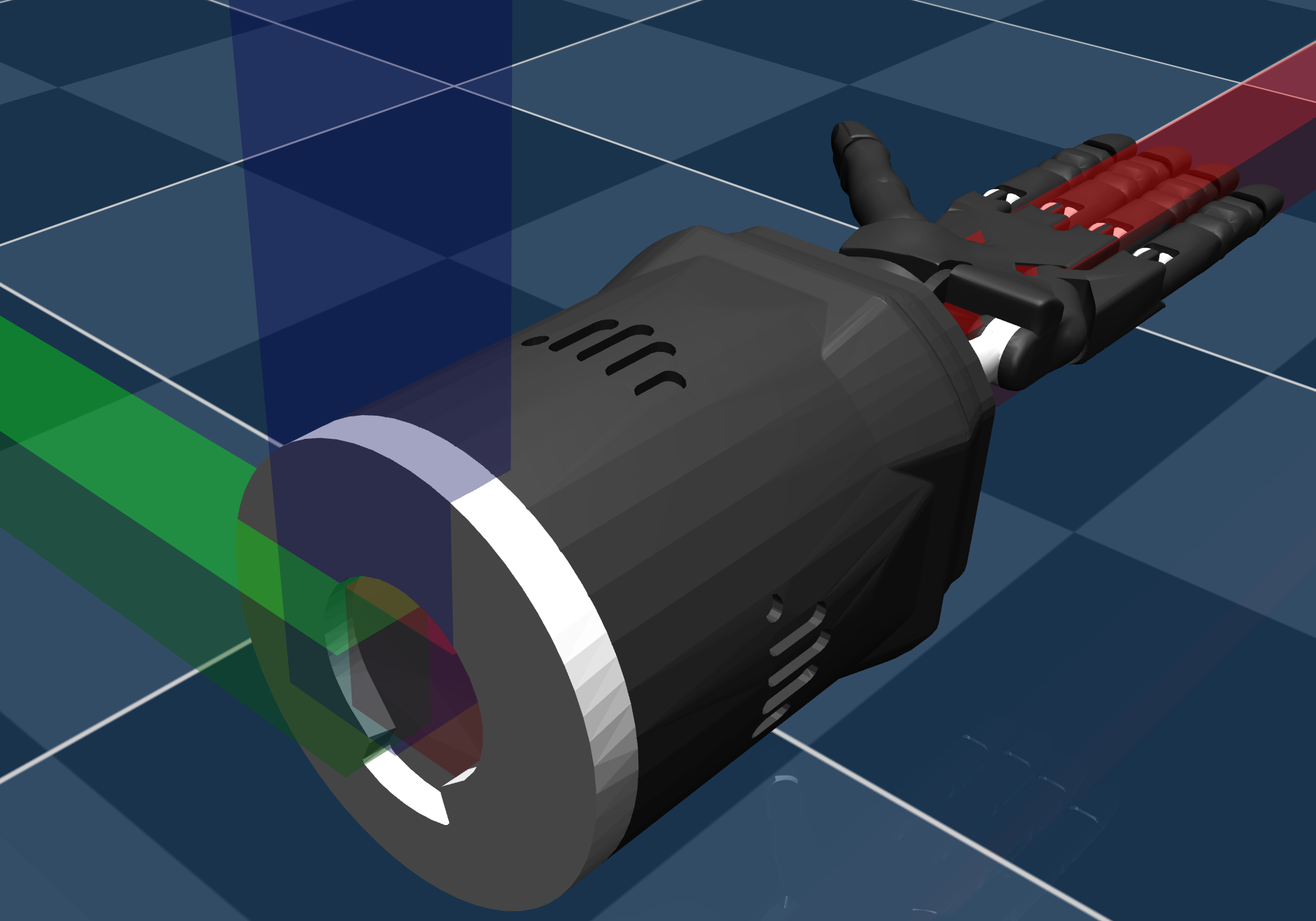

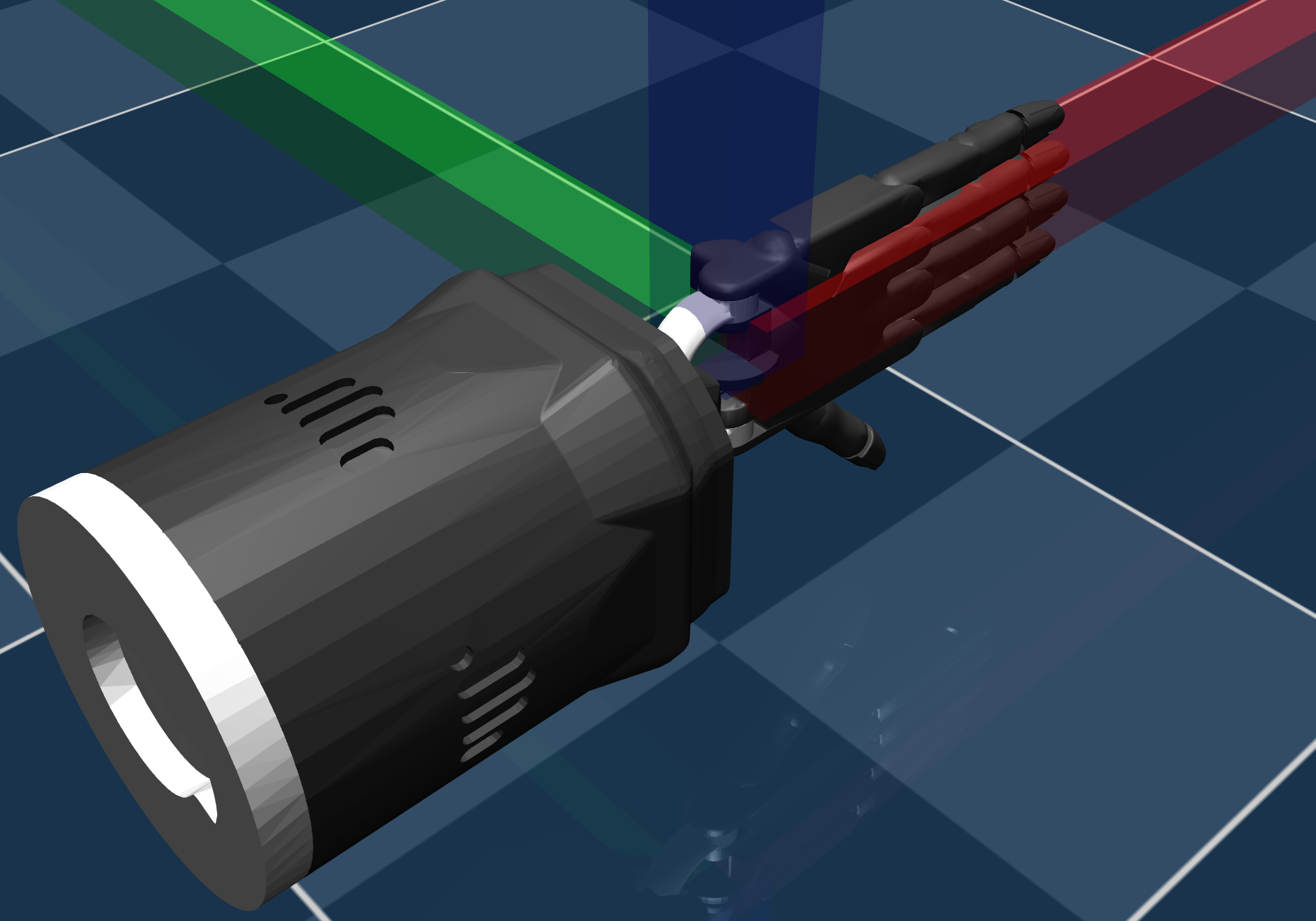

Modifying the XML (right) simplified the code by eliminating many pose transformations. All that was left to handle in code was the scale and offset needed to map hand-tracking data onto the mocap bodies.
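With the frames aligned, the remaining mapping per keypoint is affine: p_mocap = s · (p − p_wrist) + o. The sketch below uses a uniform scale s and an offset o. Measuring keypoints relative to the wrist is this sketch's own assumption. The numbers in the tests are placeholders, not calibrated values.

```python
def retarget(points, wrist, scale, offset):
    """Map tracked keypoints to mocap positions: scale * (p - wrist) + offset."""
    return [
        tuple(scale * (p[k] - wrist[k]) + offset[k] for k in range(3))
        for p in points
    ]
```

A single scalar keeps finger proportions intact. A per-axis scale is a straightforward extension if the robot hand's proportions differ from the operator's.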

Welding the mocap bodies at the distal phalanges (left) gets the job done, but the operator then has to keep thinking about where the fingertips actually are while manipulating. Welding the mocaps at the fingertips instead (right) makes teleoperation more intuitive and gives finer dexterity.
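The difference between the two weld points is a fixed offset in the distal phalanx frame. Suppose the tracked fingertip drives a mocap welded at the distal phalanx origin. The robot's real fingertip then ends up displaced from the operator's by R · t, where R is the phalanx orientation and t is the tip offset in that frame. Welding at the fingertip removes that term. The sketch below shows this geometry. The rotation and the 2.5 cm offset are hypothetical numbers, not Shadow Hand dimensions.

```python
import math


def body_to_world(body_pos, body_rot, local_point):
    """Map a point fixed in a body frame to world coordinates.

    body_rot is a 3x3 rotation matrix given as rows; it maps body-frame
    vectors into the world frame.
    """
    return tuple(
        body_pos[i] + sum(body_rot[i][j] * local_point[j] for j in range(3))
        for i in range(3)
    )


# Hypothetical: distal phalanx rotated 90 degrees about x,
# fingertip 2.5 cm along the phalanx's local +z axis.
ROT_X_90 = ((1.0, 0.0, 0.0), (0.0, 0.0, -1.0), (0.0, 1.0, 0.0))
TIP_OFFSET = (0.0, 0.0, 0.025)

# Weld at the distal origin: the origin is pinned to the tracked tip,
# so the robot's actual fingertip lands R @ TIP_OFFSET away from it.
tracked_tip = (0.1, 0.0, 0.0)
robot_tip = body_to_world(tracked_tip, ROT_X_90, TIP_OFFSET)
error = math.dist(robot_tip, tracked_tip)  # about 0.025 m of mismatch
```

The mismatch also changes direction as the finger curls, because it rotates with the phalanx. That helps explain why it feels unintuitive rather than like a constant bias.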

From coarse to fine: on the right, you can see the Shadow Hand not only bring fingertip to fingertip, but do it in two different ways, first curving the fingers and then keeping them straight.

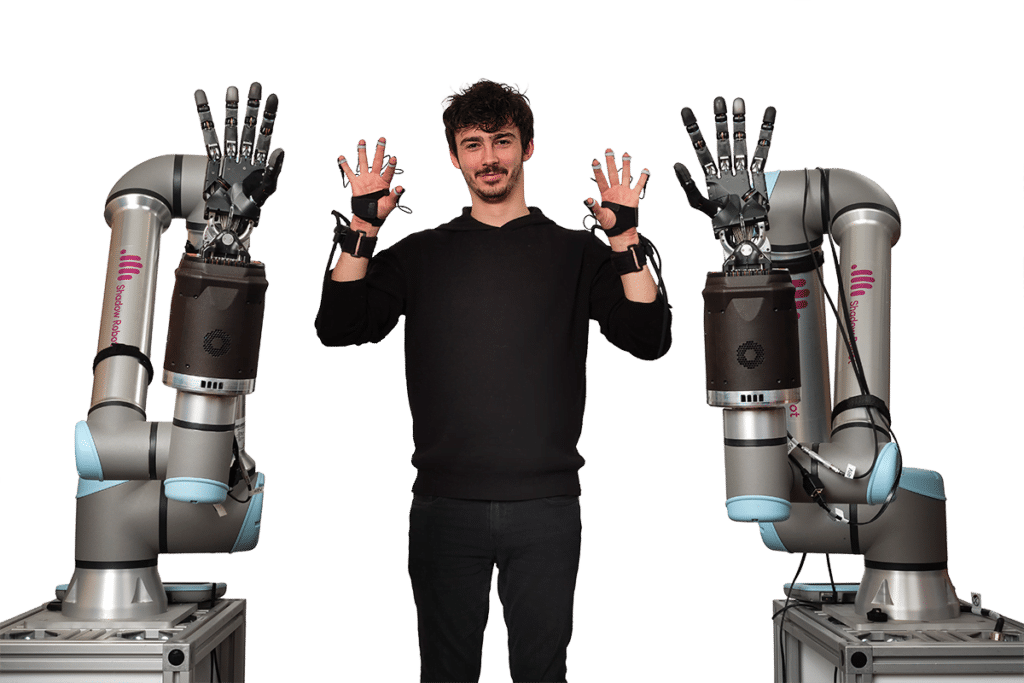

$200K setup versus our $2K setup!