Our Failed Bet on Simulation Data

Dima Yanovsky, Katya Tiukhtikova

Prox, MIT

yanovsky@mit.edu, katarint@mit.edu

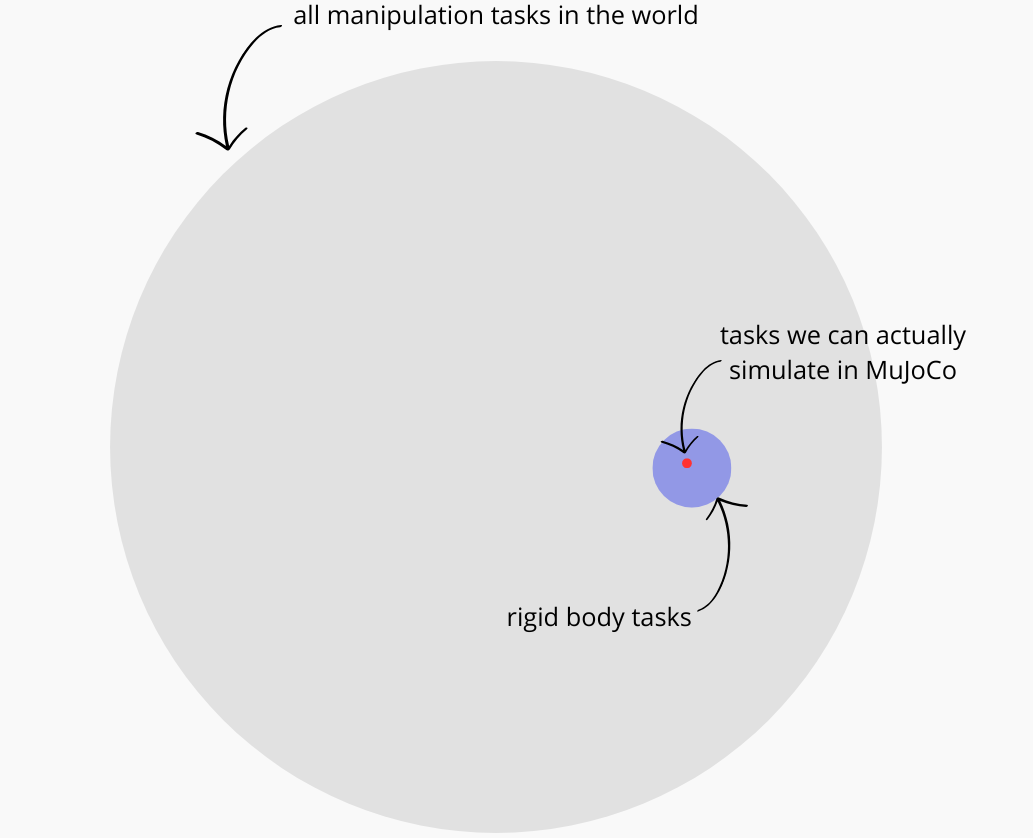

Imagine you know nothing about machine learning for robotics and decide to get into the field. Almost everyone's enthusiastic start quickly runs into a sobering realization: there is pretty much no data to train models on. There is no GitHub for robotics. No Wikipedia. No YouTube. The next question that usually comes to mind is “How do I bypass the need to collect real robot data?”, which will most likely lead you to the following answer: “I’ll just collect data in simulation.” We bet on this idea ourselves, spending the past 8 months building infrastructure for teleoperation and data collection in simulation in hopes of becoming the Scale AI for robotics. That bet turned out to be wrong.

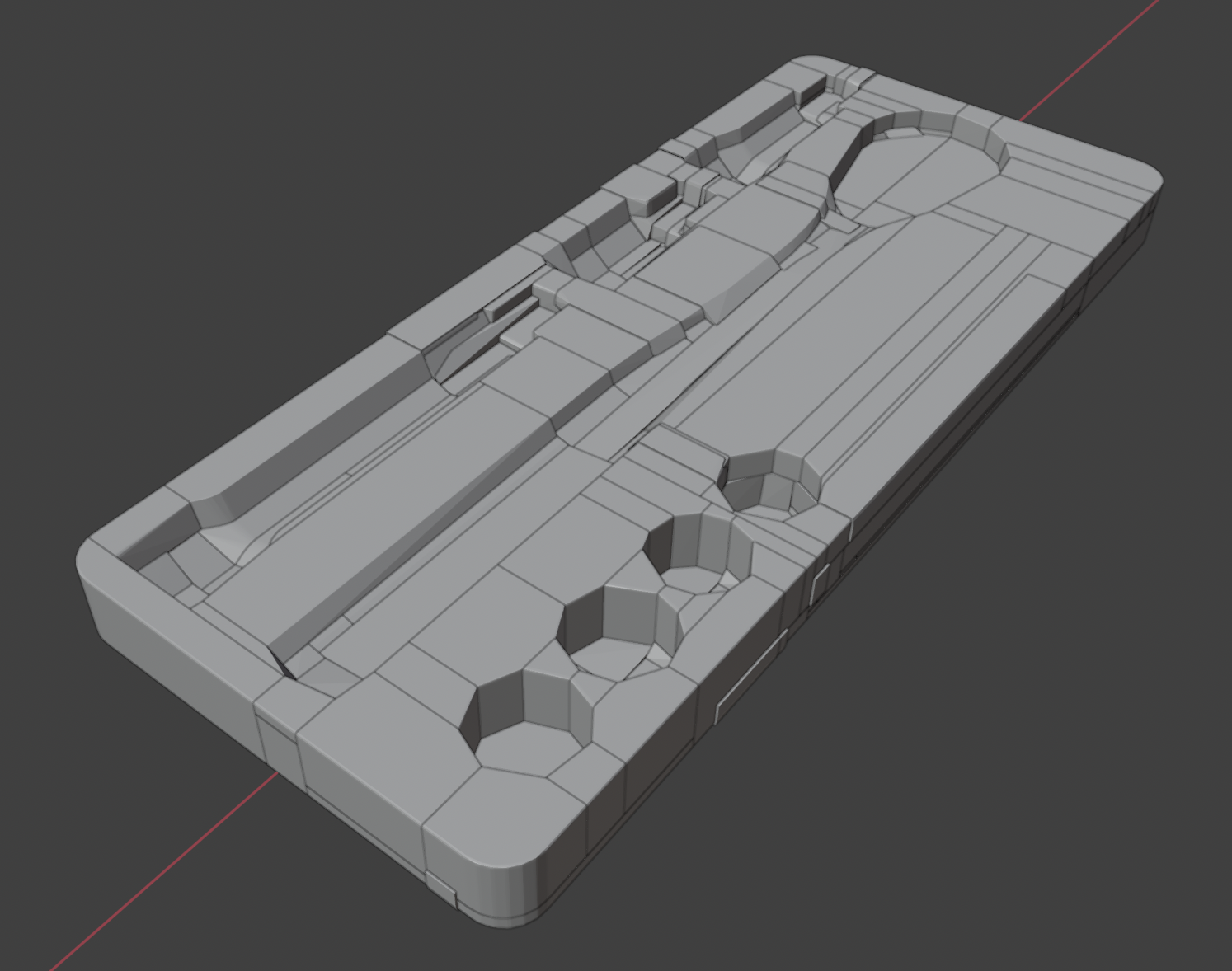

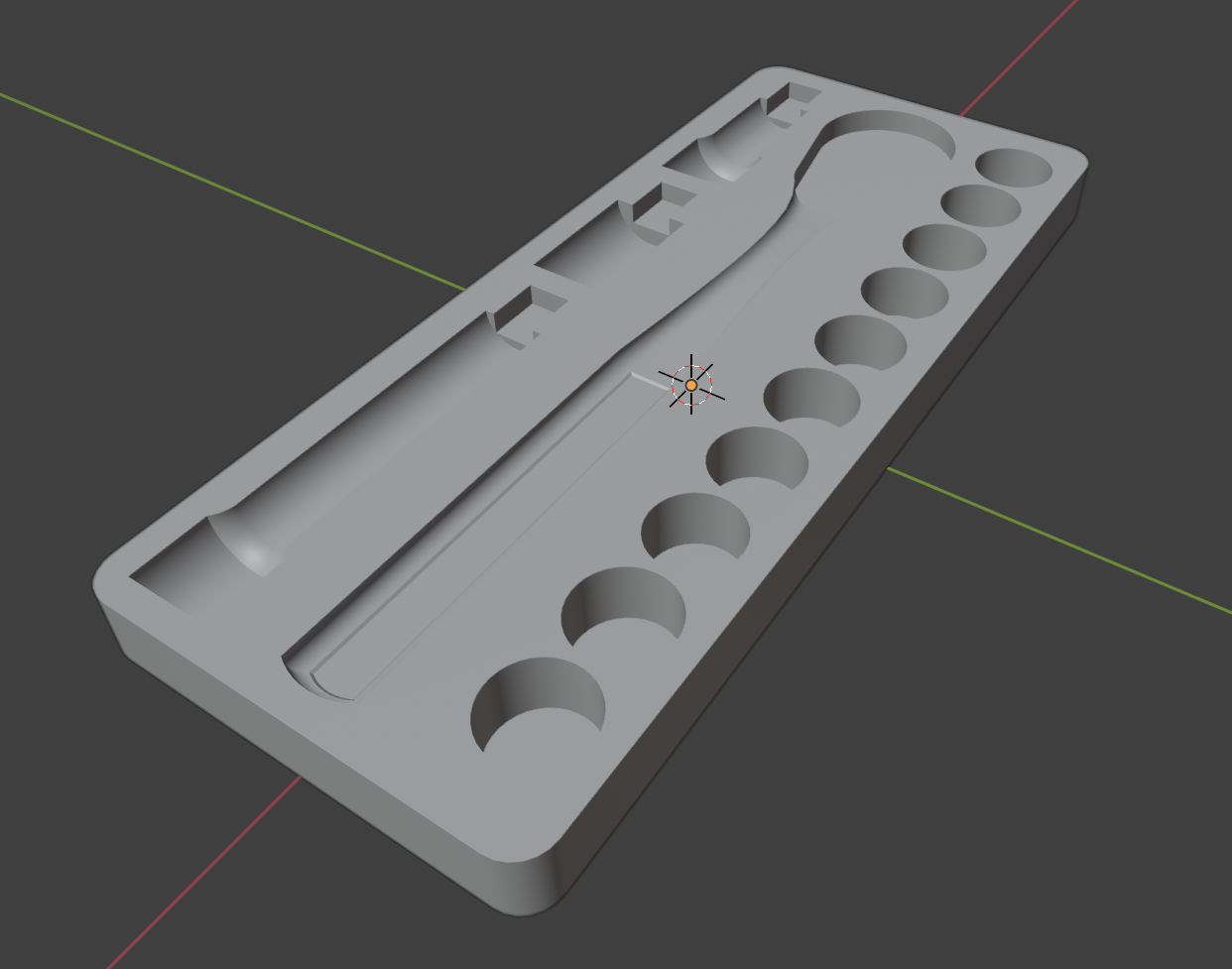

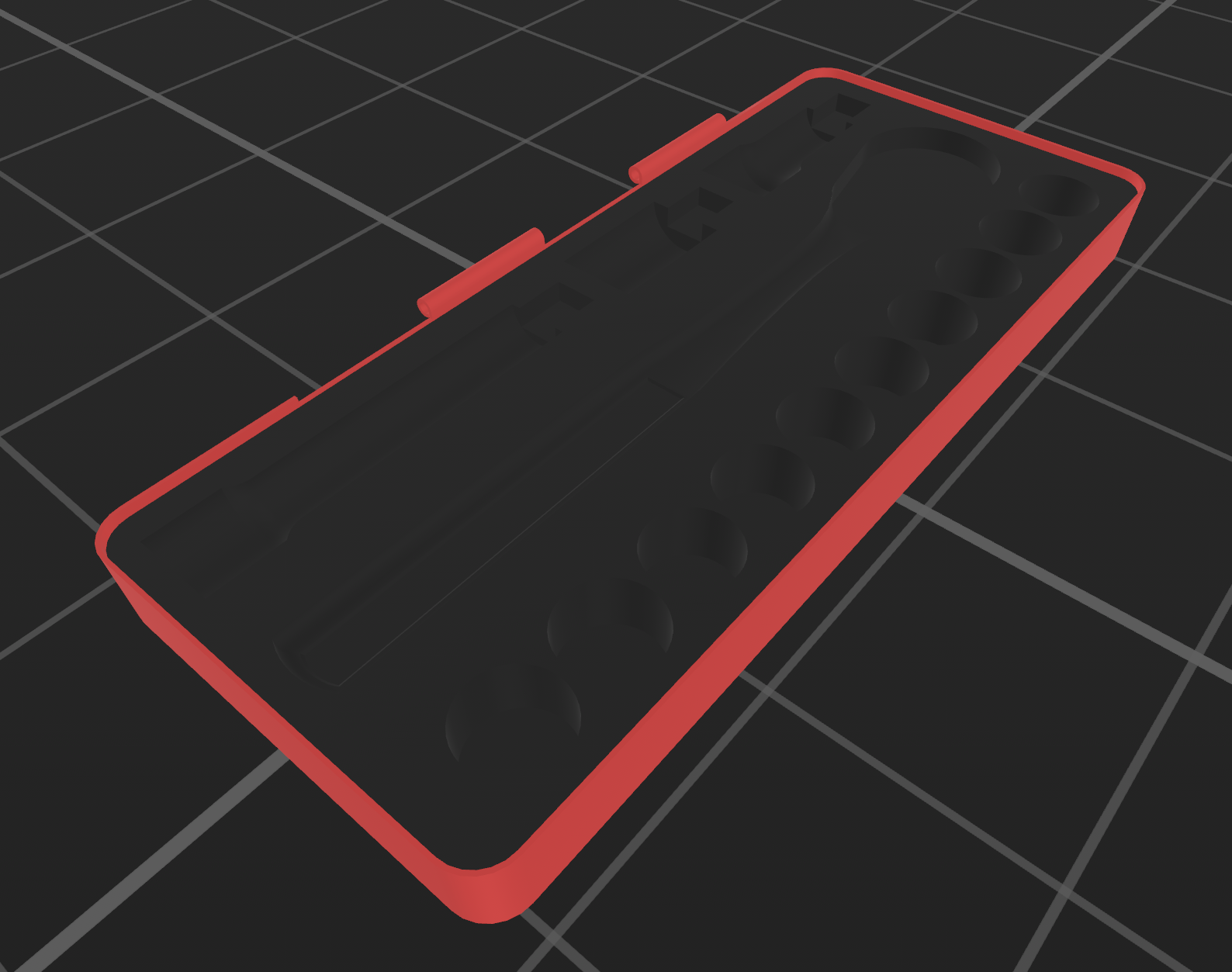

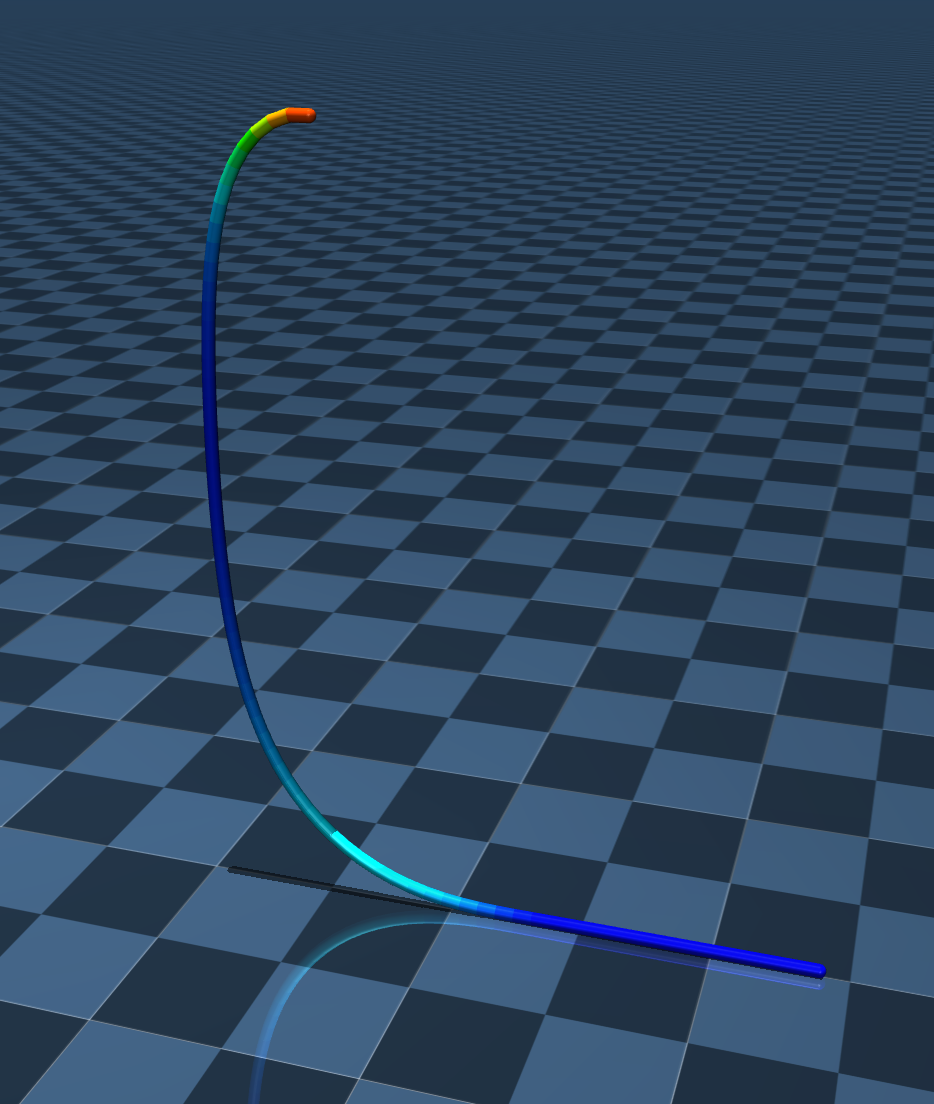

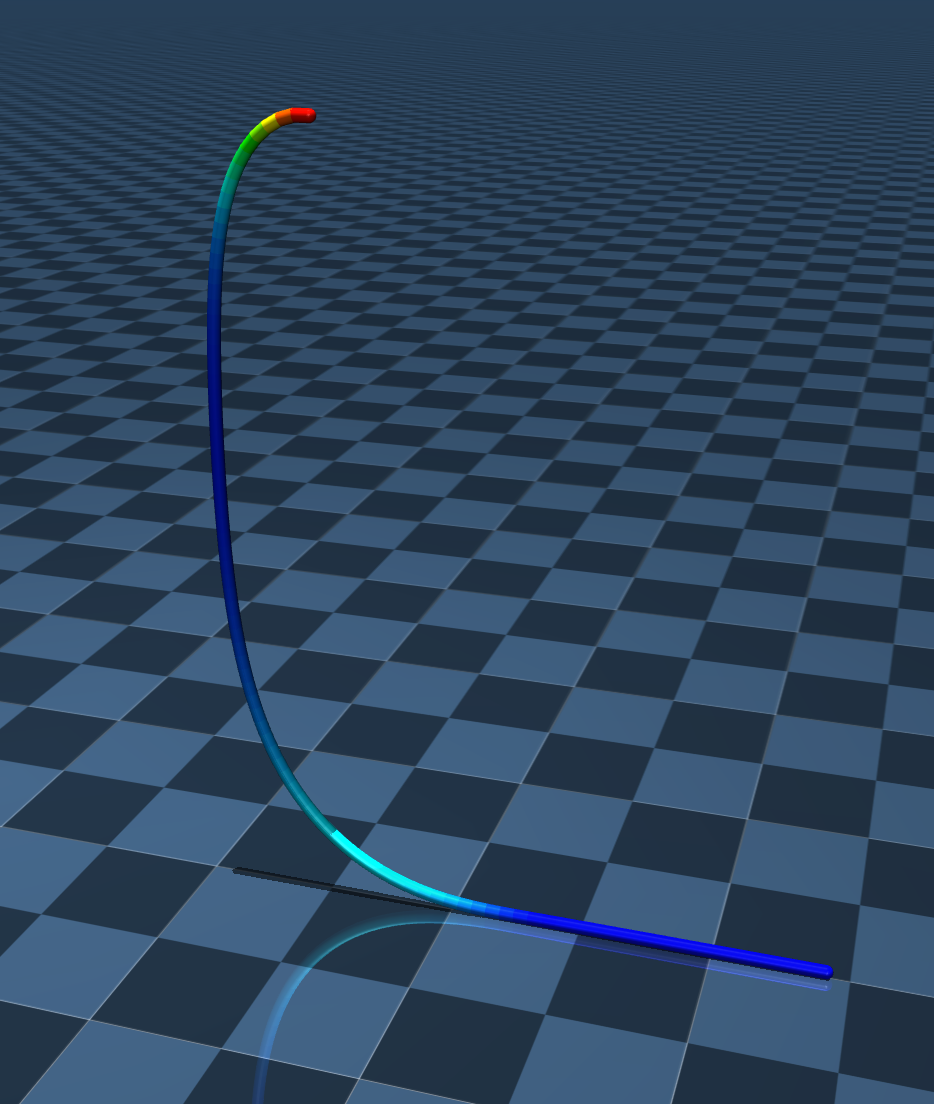

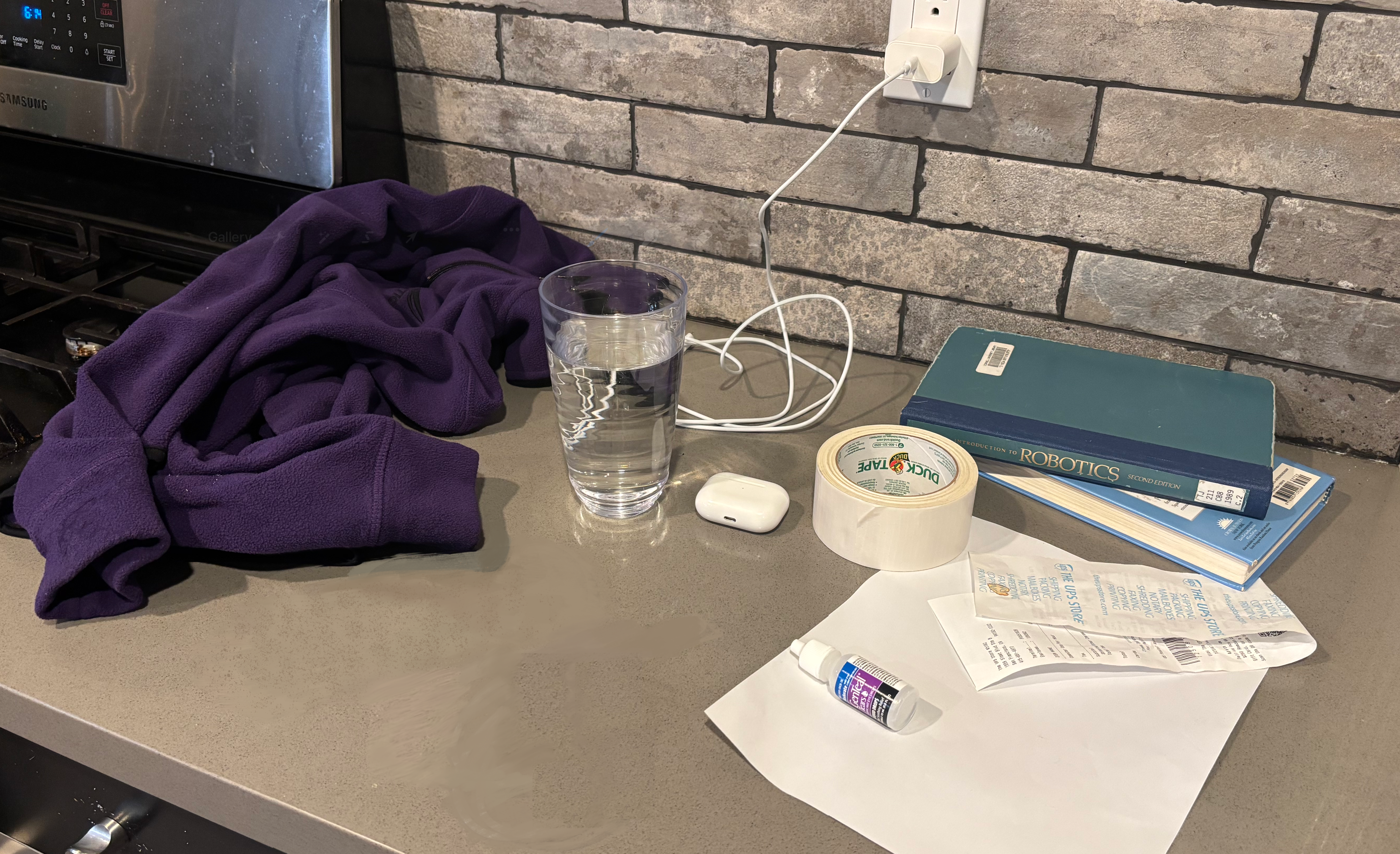

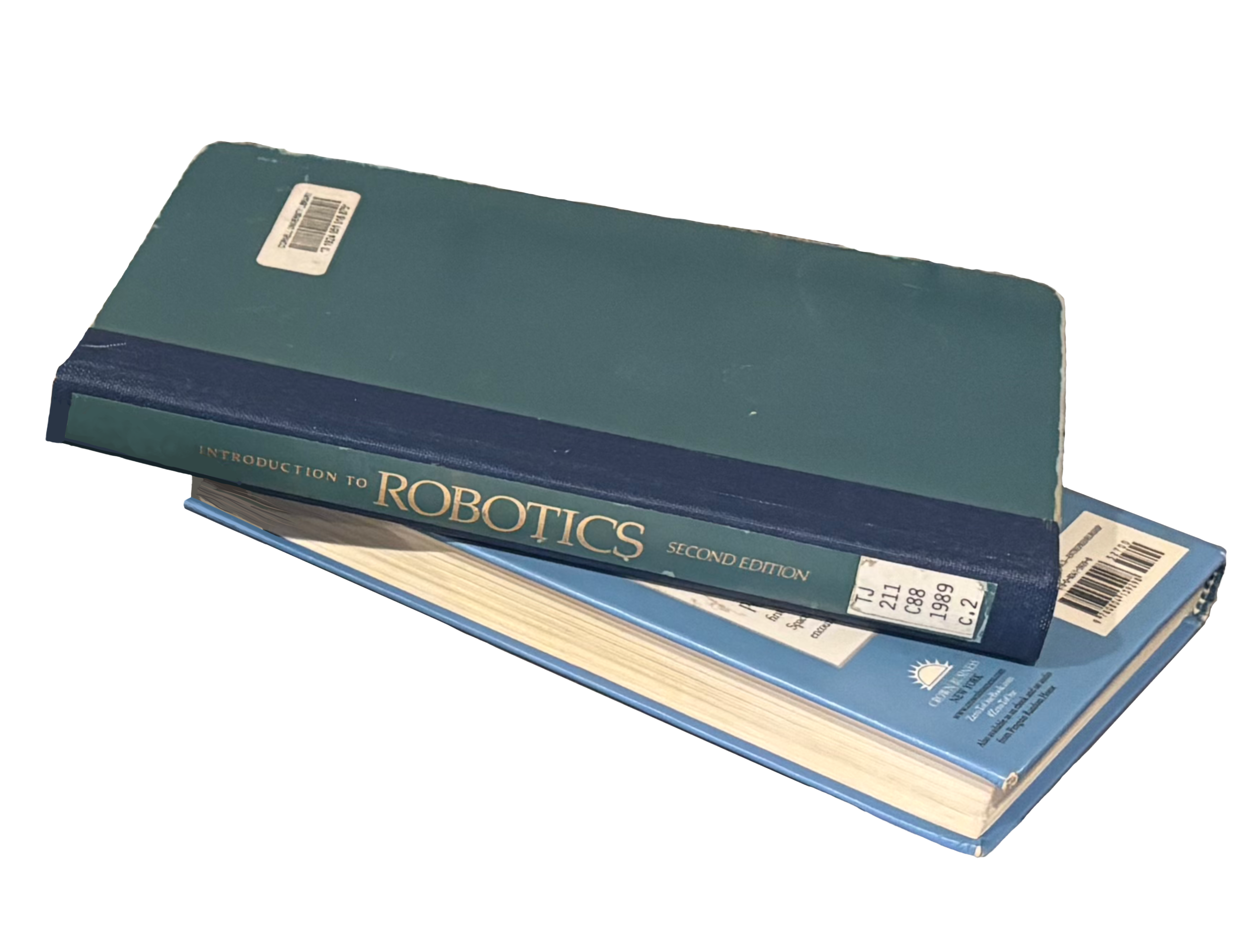

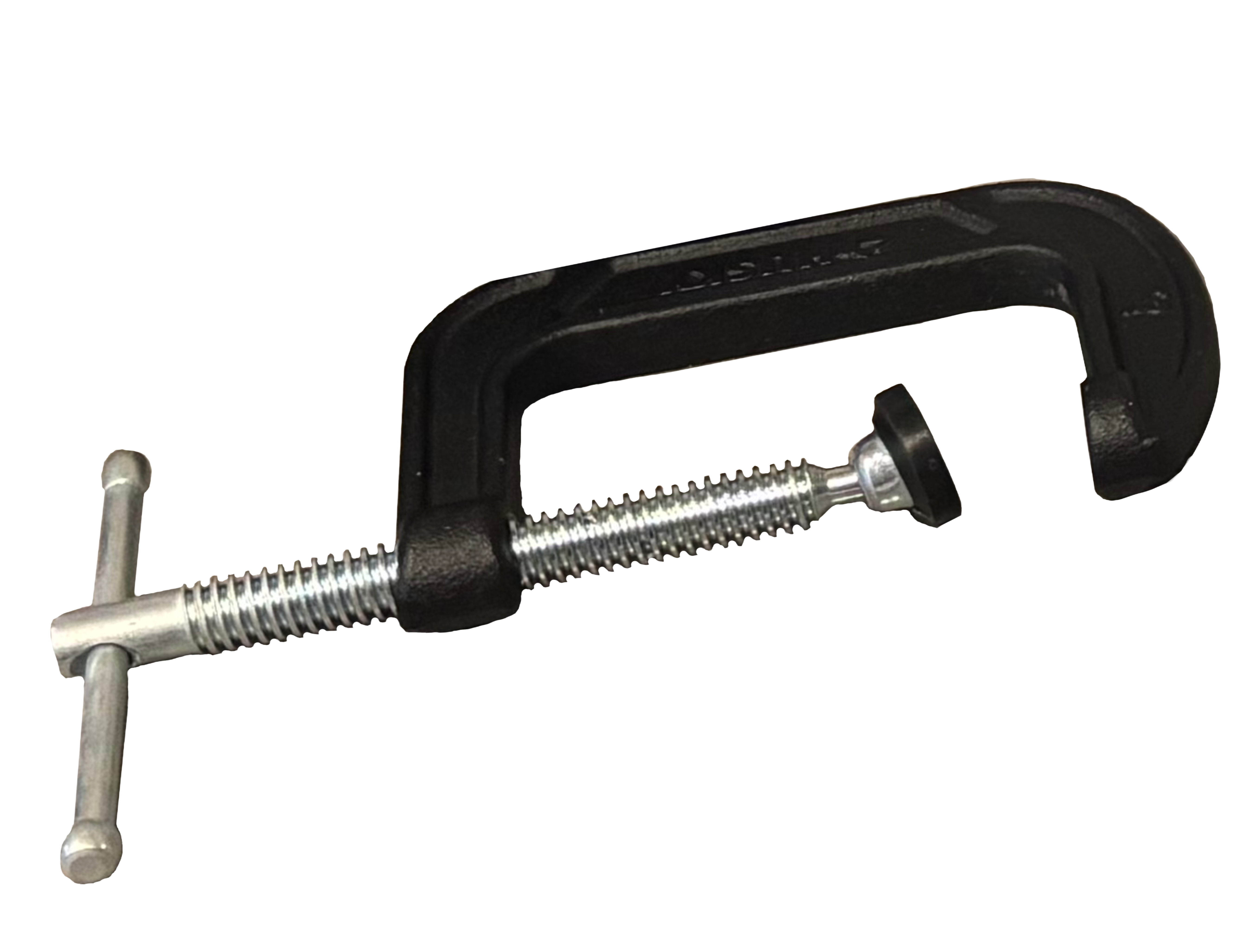

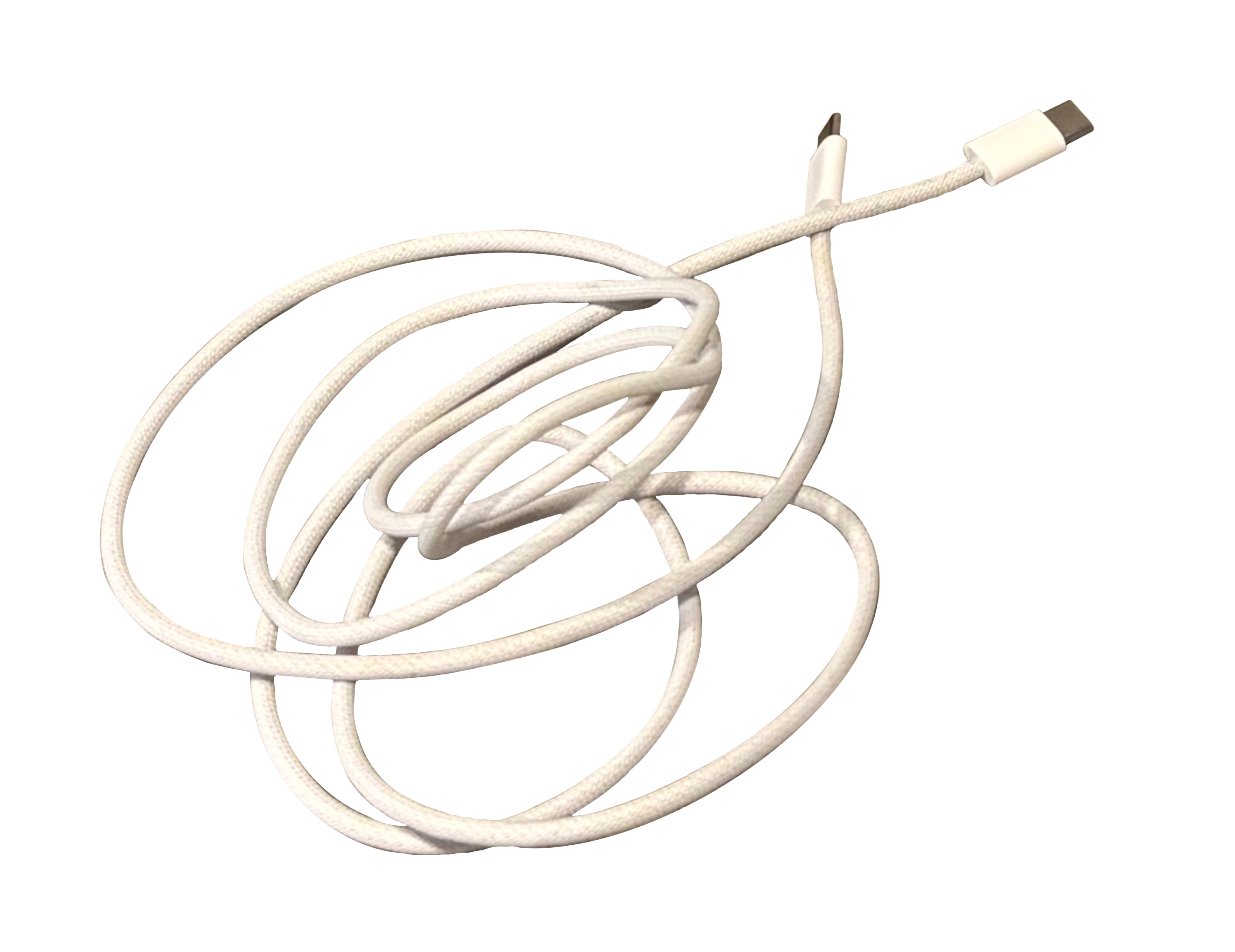

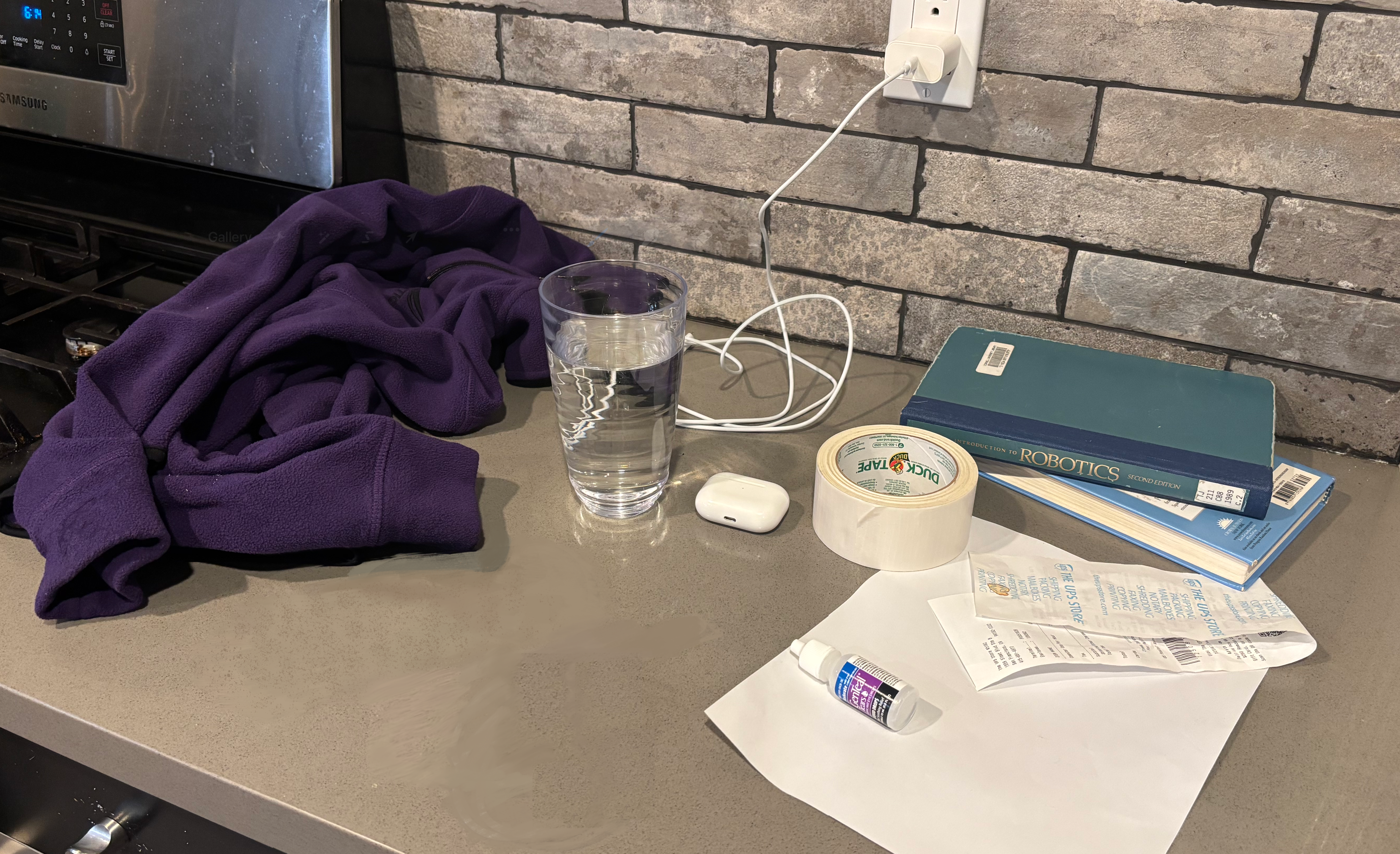

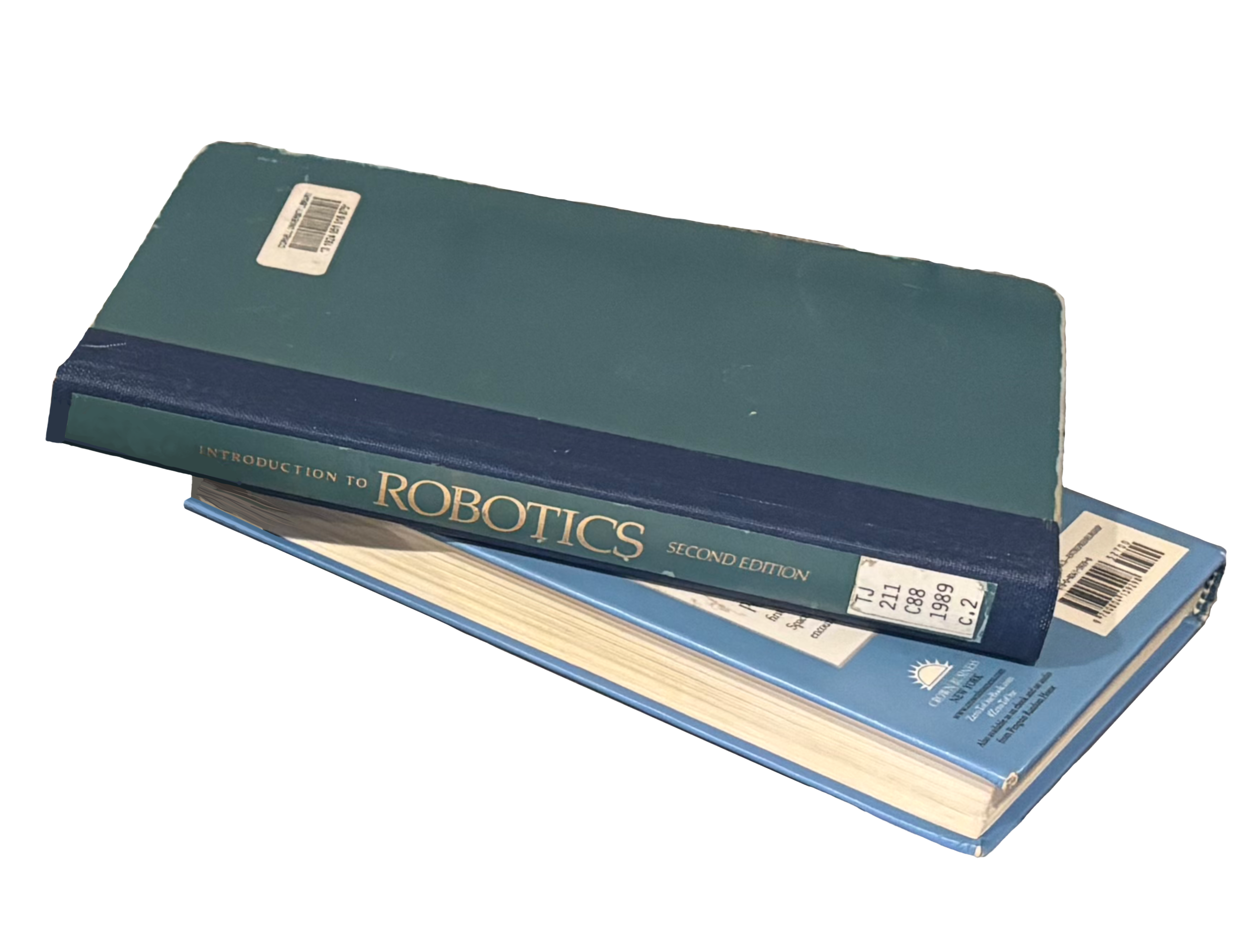

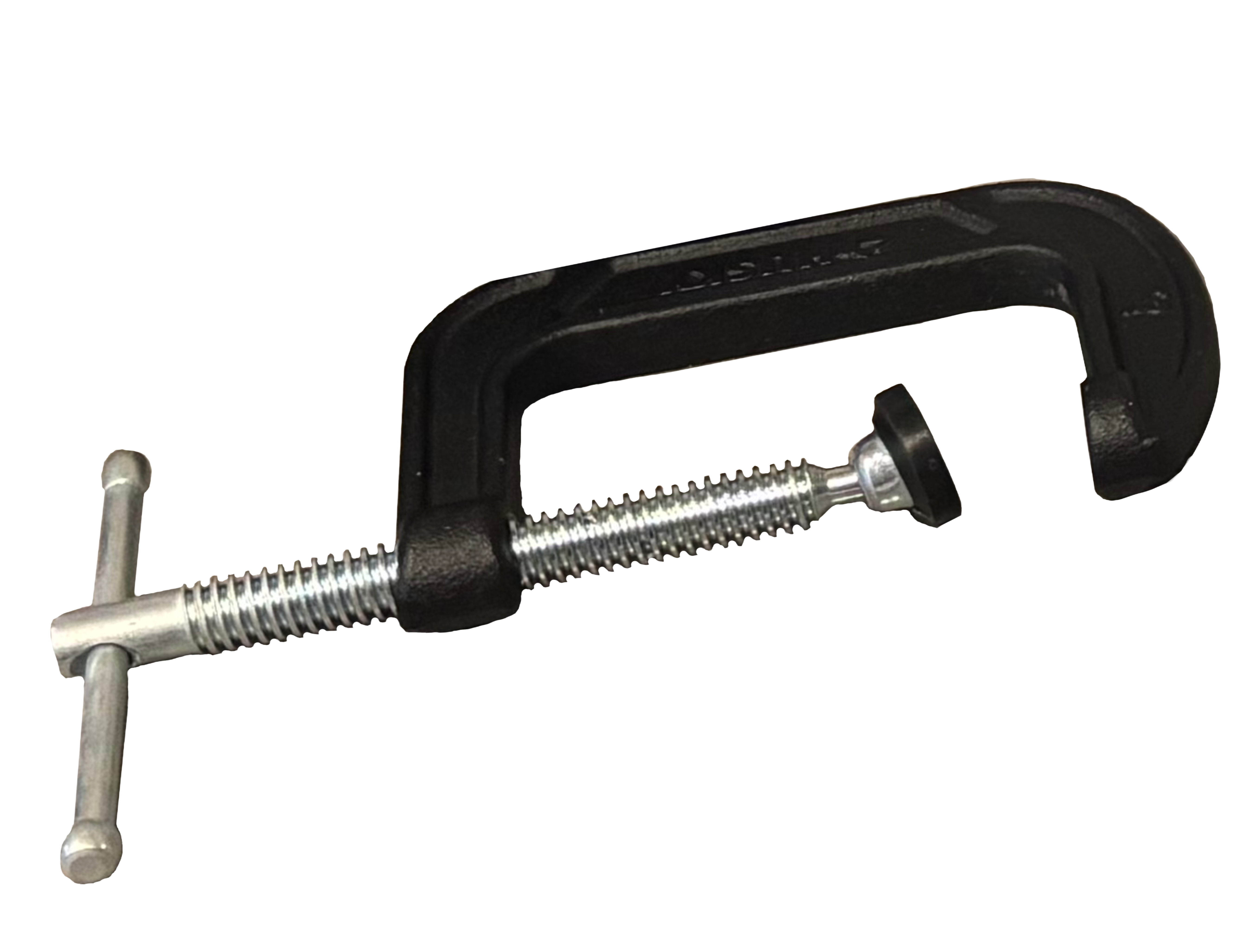

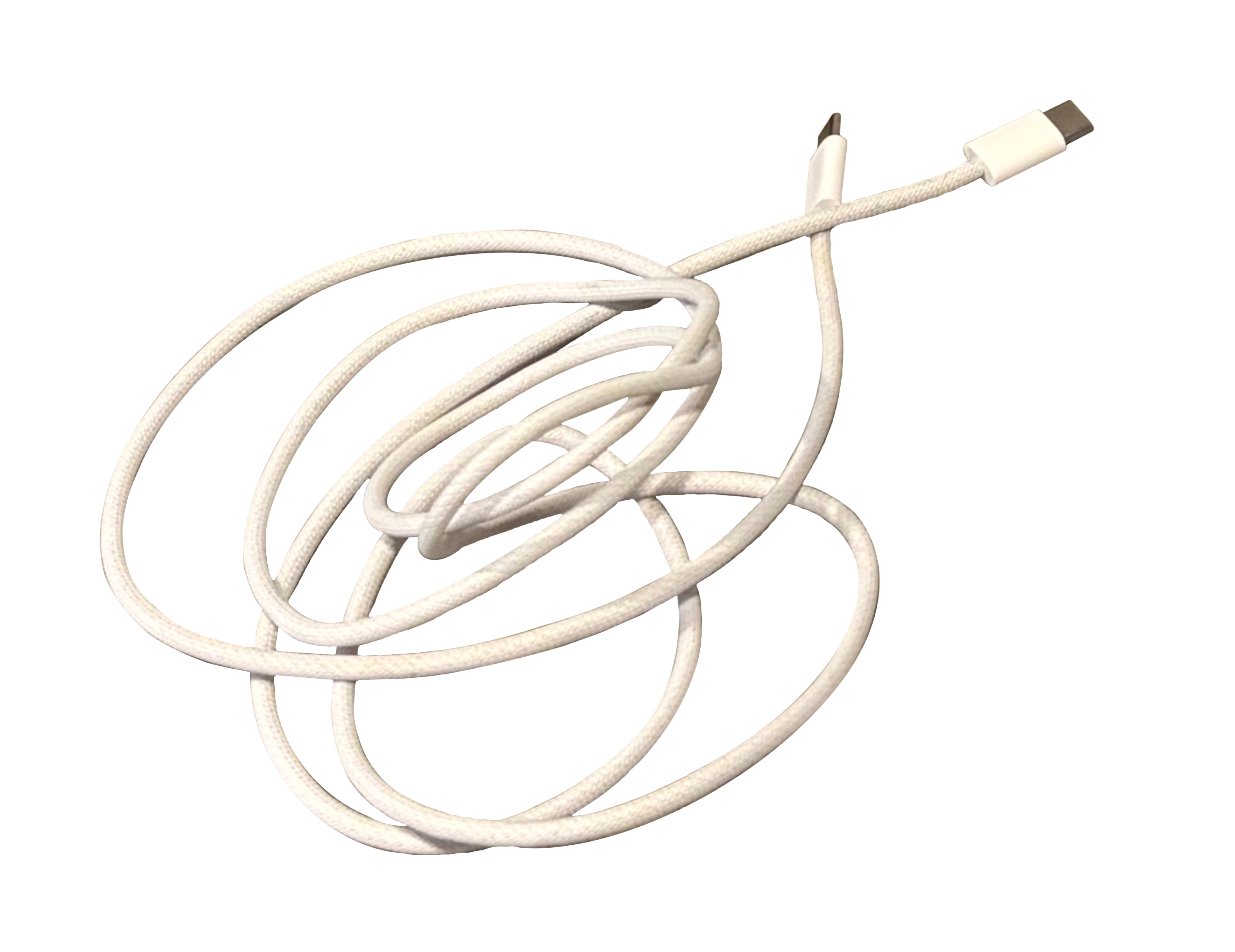

Before we dive in, let's play a quick guessing game. Here is a picture of our table with objects we use every single day. They don't look too complicated, right?

Then let's put this desk in simulation and teleoperate it! Guess how many of the objects on this table can be put in sim.

Now, once you've placed your bets, let's start.